Usha C M

Optimizing Snowflake's Data Ingestion with Advanced Snowpipe

Snowflake’s Snowpipe is a service for loading data continuously into the Snowflake data platform. Instead of waiting for scheduled batch loads, Snowpipe loads files shortly after they land in a stage, typically within minutes. This makes the data available for near real-time analytics and decision-making. Snowpipe runs on Snowflake-managed serverless compute, so there is no virtual warehouse to size, start, or schedule. This document covers advanced Snowpipe techniques and features for optimizing data ingestion.

Why is Snowpipe the Best Choice?

Automation and Real-Time Loading

Continuously loads data as it arrives using an event-driven architecture.

Scalability and Performance

Handles varying data volumes efficiently with low latency.

Ease of Use

Requires minimal configuration and no manual scheduling.

Cost-Effectiveness

Uses pay-as-you-go pricing, with no dedicated warehouse to keep running.

Cloud Integration

Works with Amazon S3, Azure Blob Storage, and Google Cloud Storage, and can be triggered by their event notifications.

Security and Governance

Encrypts data in transit and applies Snowflake’s role-based access controls to pipes and target tables.

Enhanced Features

Supports transformations during loading, configurable error handling, and retry mechanisms.

Seamless Integration

Works within the Snowflake ecosystem, so ingested data is immediately available for analysis.

Snowpipe Architecture

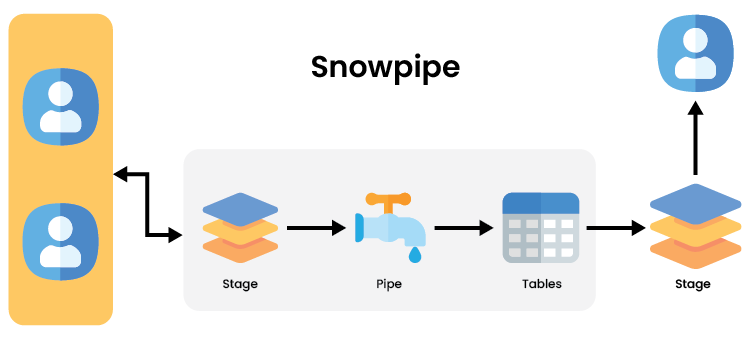

The diagram represents the architecture of Snowpipe in Snowflake, illustrating the flow of data from sources to target tables through various stages. Here’s a detailed description:

Data Sources

On the left side, the diagram starts with multiple users or systems that generate data. These users can represent different data sources such as applications, services, or databases.

Staging Areas

The data from these sources is first written to a staging area, shown as the box labeled “Stage”. This can be a Snowflake internal stage. It can also be an external stage that points to cloud storage such as Amazon S3, Azure Blob Storage, or Google Cloud Storage.

Direct Loading to Tables

In the top path, data from the staging area is loaded directly into Snowflake tables. This is conventional bulk loading with the COPY INTO command, run on a user-managed virtual warehouse and without Snowpipe.

Snowpipe Pipeline

In the middle path, the data from the staging area passes through a “Pipe”, symbolized by a tap. This pipe represents Snowpipe, which continuously monitors the staging area for new data files. Snowpipe automatically triggers the data loading process as new data arrives, ensuring continuous and near real-time data ingestion into the target Snowflake tables.

Additional Staging and Processing

In the bottom path, data is loaded from the staging area into Snowflake tables through an additional intermediate stage. This may represent further data processing or transformation before final loading.

Data Consumers

On the right side, the diagram shows users or systems consuming the data from Snowflake tables. These consumers can be analytics platforms, reporting tools, or other downstream applications.

The Snowpipe architecture provides efficient, continuous data ingestion by combining staging areas, automatic data loading, and integration with cloud storage services. The result is near real-time data availability for downstream consumers.

Key Features for Efficient Data Loading in Snowflake

Efficient data loading in Snowflake depends on a handful of key features. Let’s examine them.

Concurrency Control

Control how much loading work runs in parallel, balancing throughput against compute consumption.

Custom Notifications

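Set up notifications for specific events during the data loading process, such as load errors, to keep stakeholders informed of important milestones or potential issues. For example, Snowpipe can send error notifications through a notification integration to Amazon SNS, Azure Event Grid, or Google Cloud Pub/Sub.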

Data Transformation

Integrate data transformation steps using SQL or JavaScript UDFs, enabling real-time manipulation and customization of incoming data.

Dynamic Loading

Load data into tables with changing schemas or structures without manual intervention, facilitating seamless adaptation to evolving data formats.

Error Handling

Define actions for handling errors during the loading process, ensuring data integrity and minimizing disruptions to the loading workflow.

Advanced Monitoring and Logging

Track data loading tasks, identify performance bottlenecks, and troubleshoot issues efficiently, enabling proactive optimization and maintenance.

Integration with External Services

Extend functionality by integrating with AWS Lambda functions or custom APIs, enhancing the versatility of data loading processes.

Scheduled Loading

Schedule data loading tasks at specific intervals or times, automating the loading process for consistent and timely updates to the data warehouse.
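Snowpipe itself is event-driven, so interval-based loading is usually handled by a Snowflake task that runs COPY INTO on a schedule. The sketch below generates the statements for such a task. The object names and the hourly cron expression are hypothetical choices for illustration.

```python
from typing import List


def scheduled_copy_task(task: str, warehouse: str, cron: str, table: str, stage: str) -> List[str]:
    """Return DDL for a task that runs COPY INTO on a cron schedule (names are placeholders)."""
    return [
        f"CREATE OR REPLACE TASK {task}\n"
        f"  WAREHOUSE = {warehouse}\n"
        f"  SCHEDULE = 'USING CRON {cron}'\n"
        "AS\n"
        f"  COPY INTO {table} FROM @{stage};",
        # New tasks start suspended, so they must be resumed before they run.
        f"ALTER TASK {task} RESUME;",
    ]


if __name__ == "__main__":
    # Hypothetical objects; "0 * * * * UTC" runs at the top of every hour.
    for stmt in scheduled_copy_task("raw.hourly_load", "load_wh", "0 * * * * UTC",
                                    "raw.sales", "raw.sales_stage"):
        print(stmt)
```

A scheduled task suits sources that deliver data in predictable windows. A pipe suits sources that deliver files continuously.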

Best Practices for Effective Data Ingestion in Snowflake

To ensure efficient, secure, and cost-effective data ingestion with Snowpipe, follow these best practices:

File Size Optimization

Use compressed files of roughly 100 MB to 250 MB. Large numbers of small files add per-file overhead and raise the cost per TB loaded.
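When upstream systems emit many small files, one way to follow this guideline is to group them before staging. The greedy sketch below packs (name, size) pairs into batches of about 150 MB, an assumed target picked from inside the recommended range. How each batch is concatenated and uploaded depends on your pipeline and is left out.

```python
from typing import List, Tuple

MB = 1024 * 1024


def group_files(files: List[Tuple[str, int]], target_bytes: int = 150 * MB) -> List[List[str]]:
    """Greedily group (name, size_bytes) pairs into batches of about target_bytes each."""
    batches: List[List[str]] = []
    current: List[str] = []
    current_size = 0
    for name, size in files:
        # Close the current batch if adding this file would overshoot the target.
        if current and current_size + size > target_bytes:
            batches.append(current)
            current, current_size = [], 0
        current.append(name)
        current_size += size
    if current:
        batches.append(current)
    return batches
```

For example, 400 files of 1 MB each produce batches of 150, 150, and 100 files. A single file larger than the target ends up in a batch by itself.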

Buffer Settings

Tune the buffer settings of the Snowflake Connector for Kafka (buffer.count.records, buffer.size.bytes, and buffer.flush.time) to balance latency against throughput.
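The Kafka connector flushes its buffer to Snowflake as soon as the first of its record-count, size, or time thresholds is reached. The plain-Python simulation below illustrates that trade-off. The class names and threshold values are illustrative assumptions, not the connector’s code or defaults. Unlike the real connector, this sketch checks elapsed time only when a new record arrives.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FlushPolicy:
    # Illustrative values mirroring buffer.count.records, buffer.size.bytes, buffer.flush.time.
    max_records: int = 10_000
    max_bytes: int = 5_000_000
    max_age_s: float = 120.0


@dataclass
class RecordBuffer:
    policy: FlushPolicy
    records: List[bytes] = field(default_factory=list)
    size: int = 0
    opened_at: Optional[float] = None

    def add(self, record: bytes, now: float) -> Optional[List[bytes]]:
        """Add a record; return the flushed batch if any threshold is reached, else None."""
        if self.opened_at is None:
            self.opened_at = now
        self.records.append(record)
        self.size += len(record)
        if (len(self.records) >= self.policy.max_records
                or self.size >= self.policy.max_bytes
                or now - self.opened_at >= self.policy.max_age_s):
            return self.flush()
        return None

    def flush(self) -> List[bytes]:
        """Hand back the buffered records and reset the buffer."""
        batch = self.records
        self.records, self.size, self.opened_at = [], 0, None
        return batch
```

Lower thresholds reduce latency but produce more, smaller files. Higher thresholds produce fewer, larger files at the cost of latency.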

Error Handling

Route records that fail ingestion to a dead-letter queue (DLQ), so they can be inspected and replayed without blocking the rest of the data and without being silently lost.
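In Kafka-based pipelines the dead-letter queue usually lives in Kafka Connect. The routing idea itself is simple, though, and the sketch below shows it in plain Python for a batch of JSON lines processed before staging. The required fields are a hypothetical schema rule, and the in-memory list stands in for whatever queue or table you actually use.

```python
import json
from typing import Any, Dict, List, Tuple

# Hypothetical required fields; replace with your own schema rules.
REQUIRED_FIELDS = ("id", "amount")


def route_records(lines: List[str]) -> Tuple[List[Dict[str, Any]], List[Dict[str, str]]]:
    """Split raw JSON lines into loadable records and dead-letter entries."""
    good: List[Dict[str, Any]] = []
    dead_letter: List[Dict[str, str]] = []
    for line in lines:
        try:
            record = json.loads(line)
            if not isinstance(record, dict):
                raise ValueError("not a JSON object")
            missing = [f for f in REQUIRED_FIELDS if f not in record]
            if missing:
                raise ValueError(f"missing fields: {missing}")
            good.append(record)
        except ValueError as exc:  # json.JSONDecodeError is a subclass of ValueError
            # Keep the original payload plus the reason so the record can be fixed and replayed.
            dead_letter.append({"payload": line, "error": str(exc)})
    return good, dead_letter
```

Storing the untouched payload alongside the error message keeps the DLQ replayable once the upstream issue is fixed.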

Schema Management

Utilize Snowpipe’s schema evolution capabilities to handle changes in data structure without manual intervention.
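In Snowflake, schema evolution is enabled by setting `ENABLE_SCHEMA_EVOLUTION = TRUE` on the target table and loading with the `MATCH_BY_COLUMN_NAME` copy option. To make the idea concrete, the sketch below does by hand what that feature automates. It compares an incoming record against the table’s known columns and emits the `ALTER TABLE ... ADD COLUMN` statements someone would otherwise run manually. The table name and the coarse type mapping are assumptions for illustration, not Snowflake’s inference logic.

```python
from typing import Any, Dict, Iterable, List


def infer_sql_type(value: Any) -> str:
    """Deliberately coarse, illustrative mapping from a JSON value to a Snowflake type."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return "BOOLEAN"
    if isinstance(value, int):
        return "NUMBER"
    if isinstance(value, float):
        return "FLOAT"
    if isinstance(value, (dict, list)):
        return "VARIANT"
    return "VARCHAR"


def new_column_ddl(table: str, existing: Iterable[str], record: Dict[str, Any]) -> List[str]:
    """Return ALTER TABLE statements for keys in record that the table does not have yet."""
    known = {c.upper() for c in existing}  # case-insensitive match, like CASE_INSENSITIVE
    return [
        f"ALTER TABLE {table} ADD COLUMN {key.upper()} {infer_sql_type(value)};"
        for key, value in record.items()
        if key.upper() not in known
    ]
```

For example, a sensor record that suddenly includes pm25 and ok fields against a table with only ID and TS yields two ADD COLUMN statements.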

Monitor and Alert

Utilize Snowflake’s monitoring tools and integrate with third-party solutions to track the status and performance of Snowpipe jobs. Set up alerts to notify relevant teams of any ingestion failures or anomalies.
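`SYSTEM$PIPE_STATUS` returns a JSON string describing a pipe, including fields such as `executionState` and `pendingFileCount`. A minimal alerting check over that string might look like the following. The backlog threshold and message wording are hypothetical, and the sample inputs in the tests are made up rather than captured output.

```python
import json
from typing import List


def pipe_alerts(status_json: str, max_pending: int = 1000) -> List[str]:
    """Return alert messages derived from a SYSTEM$PIPE_STATUS result string."""
    status = json.loads(status_json)
    alerts: List[str] = []
    state = status.get("executionState")
    if state != "RUNNING":
        alerts.append(f"pipe not running: {state}")
    pending = status.get("pendingFileCount", 0)
    # A growing backlog often means files arrive faster than they are loaded.
    if pending > max_pending:
        alerts.append(f"backlog of {pending} files exceeds {max_pending}")
    return alerts
```

The returned messages could be forwarded to whatever paging or chat tool the team already uses. Per-file load results are available separately through COPY_HISTORY.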

Secure Data Ingestion

Ensure data is encrypted both in transit and at rest to protect sensitive information. Implement fine-grained access controls to restrict who can manage and access Snowpipe and the underlying data.

Advantages of Snowpipe Over Competitors

Scalability

Snowpipe scales automatically with data volume, because Snowflake manages the underlying compute.

Ease of Use

Pipes are defined in standard SQL, and extensive documentation makes Snowpipe easy to implement and manage.

Integration

Snowpipe integrates with cloud storage event notifications, the Snowflake Connector for Kafka, and third-party tools, providing flexibility in data ingestion.

Real-Time Capabilities

Snowpipe’s continuous, near real-time loading delivers timely insights and data availability.

Cost Efficiency

With pay-as-you-go pricing, Snowpipe offers cost-effective data ingestion without compromising on performance.

Comparison Table of Snowpipe with Competitors

| Feature | Snowflake Snowpipe | AWS Glue | Azure Data Factory | Google Cloud Dataflow |
|---|---|---|---|---|
| Concurrency Control | Yes | Yes | Yes | Yes |
| Custom Notifications | Yes | Limited | Yes | Limited |
| Data Transformation | SQL/JS UDFs | Python/Scala | Mapping Data Flows | Apache Beam |
| Dynamic Loading | Yes | Yes | Yes | Yes |
| Error Handling | Advanced | Basic | Advanced | Basic |
| Monitoring and Logging | Extensive | Limited | Extensive | Extensive |
| Integration with Services | AWS Lambda, APIs | AWS Services | Azure Services | Google Services |
| Scheduled Loading | Yes | Yes | Yes | Yes |
| Real-time Streaming | Kafka, Kinesis | Kinesis, Kafka | Event Hubs | Pub/Sub |
| Data Quality Monitoring | Real-time | Limited | Real-time | Limited |

Real-World Use Cases and Examples of Snowpipe

Use Case 1: Healthcare Data Ingestion and Analysis

Scenario: A healthcare organization needs to continuously ingest patient and policy data from various sources, including EMR systems and insurance databases. The goal is to ensure real-time data availability for patient monitoring, policy management, and analytics.

Solution:

- Automated Data Ingestion: Use Snowpipe to automatically ingest patient records, policy details, and medical data from external cloud storage into Snowflake.

- Data Transformation: Apply transformations to standardize medical policy numbers, calculate premiums, and update policy statuses during ingestion.

- Monitoring and Alerting: Implement monitoring tools to track ingestion performance and set up alerts for any anomalies or failures.

- Schema Evolution: Utilize Snowpipe’s schema evolution capabilities to accommodate changes in patient records and policy data without disrupting the ingestion process.
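The policy-number standardization step above could be as small as one pure function. In this sketch the canonical format (POL- followed by 8 digits) is a hypothetical assumption, not a healthcare standard. Such a function could run in an upstream job or as the handler of a Python UDF applied after loading. Values that cannot be normalized return None so they can be routed for review instead of being guessed.

```python
import re
from typing import Optional

# Hypothetical canonical format: "POL-" followed by exactly 8 digits.
_DIGITS = re.compile(r"\d+")


def normalize_policy_number(raw: str) -> Optional[str]:
    """Strip formatting noise and zero-pad to the assumed 8-digit canonical form."""
    digits = "".join(_DIGITS.findall(raw))
    if not digits or len(digits) > 8:
        return None  # cannot be normalized safely; send for manual review
    return f"POL-{digits.zfill(8)}"
```

Because the function is idempotent, re-running it over already-normalized values leaves them unchanged.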

Use Case 2: Retail Sales Data Streaming

Scenario: A retail company needs to continuously ingest sales transactions from multiple point-of-sale systems to perform real-time sales analysis, inventory management, and trend forecasting.

Solution:

- Continuous Loading: Set up Snowpipe to continuously load sales data from cloud storage into Snowflake tables as point-of-sale systems write transaction files.

- Optimized File Sizes: Batch small transaction files into larger ones to improve ingestion performance.

- Data Partitioning: Organize staged files into date-based paths. For large tables, define clustering keys that match common query filters to improve query performance.

- Error Handling: Implement automatic retry mechanisms for transient errors to ensure data is ingested without interruption.
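The retry step above can be sketched as a generic wrapper with exponential backoff and jitter. It could wrap, for example, the upload of a transaction file to cloud storage. `TransientError` is a hypothetical marker class standing in for whatever timeout or throttling errors your client raises. Errors not listed in `retry_on` propagate immediately.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as timeouts or throttling."""


def with_retries(
    fn: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.5,
    retry_on: Tuple[Type[BaseException], ...] = (TransientError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying retryable errors with exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Wait a random time between 0 and base_delay * 2**attempt seconds.
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    raise AssertionError("unreachable")
```

The random jitter keeps many point-of-sale uploaders from retrying in lockstep after a shared outage. The injectable `sleep` parameter makes the wrapper easy to test without real delays.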

Use Case 3: IoT Data Processing for Smart Cities

Scenario: A city management team needs to ingest and analyze data from various IoT sensors (e.g., traffic cameras, environmental sensors) to optimize city operations and enhance public safety.

Solution:

- Automated Ingestion: Use Snowpipe to continuously ingest data from IoT sensors into Snowflake for real-time analysis.

- Schema Evolution: Utilize schema evolution to accommodate new sensor types and data formats without disrupting the ingestion process.

- Real-Time Analytics: Implement real-time analytics dashboards to monitor traffic patterns, air quality, and other critical metrics.

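- Cost Management: Track Snowpipe credit consumption, for example with PIPE_USAGE_HISTORY. Apply resource monitors to the warehouses used for downstream processing, since they do not cover Snowpipe’s serverless compute. Optimize storage costs by archiving historical data.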

Conclusion

Snowpipe gives Snowflake users an automated, event-driven way to load data continuously with minimal operational overhead. The practices covered here work together with it: right-sized files, tuned Kafka buffer settings, dead-letter queues, schema evolution, monitoring and alerting, and secure access controls. Combined, they support near real-time analytics in domains such as healthcare, retail, and smart-city IoT, while keeping ingestion scalable and cost-effective.