Nitinkumar Ambulgekar

Protecting Secrets In Use with Confidential Computing

With the emergence of Cloud Native Architecture, organizations are shifting toward distributed deployments of their applications on public cloud and edge platforms. As workloads move from in-house systems to cloud-based infrastructure, businesses across many industries are adopting Cloud Native principles and technologies.

Developers worldwide have embraced Cloud Native practices such as DevSecOps, continuous delivery, containers, and microservices. These practices are foundational to digital transformation: they enable enterprises to deliver applications that respond quickly to customer demands, and they are driving a fresh wave of business expansion.

While cloud-native methodologies bring substantial benefits and play a pivotal role in industrial digitalization, they also create notable challenges for secrets management: the proliferation of secrets, decentralized storage without central control or revocation, limited visibility into how secrets are used across environments, and the need to support both traditional IT systems and cloud-native environments. These issues should not be underestimated.

HashiCorp Vault for Secret Management and Data Encryption

HashiCorp Vault is a central repository for many kinds of secrets, including sensitive environment variables, database credentials, and API keys. Administrators decide who may access each secret and can revoke or renew that access as needed, so Vault gives them fine-grained control over critical credentials throughout their lifecycle.
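A minimal sketch of that access-control workflow against Vault's HTTP API follows, assuming the KV version 2 secrets engine is mounted at the default `secret/` path. The address, token, and secret path are hypothetical placeholders, and the functions only build `urllib` requests (without sending them) so they can be inspected offline.

```python
import json
import urllib.request
from typing import Optional

# Hypothetical address and token for illustration only.
VAULT_ADDR = "http://127.0.0.1:8200"
VAULT_TOKEN = "hvs.example-token"


def _vault_request(method: str, path: str, body: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a token-authenticated request to Vault's HTTP API."""
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(f"{VAULT_ADDR}/v1/{path}", data=data, method=method)
    req.add_header("X-Vault-Token", VAULT_TOKEN)
    if data is not None:
        req.add_header("Content-Type", "application/json")
    return req


def write_secret(path: str, secret: dict) -> urllib.request.Request:
    # KV v2 mounted at "secret/": writes go to secret/data/<path>, wrapped in {"data": ...}
    return _vault_request("POST", f"secret/data/{path}", {"data": secret})


def read_secret(path: str) -> urllib.request.Request:
    # The response nests the stored key-value pairs under data.data
    return _vault_request("GET", f"secret/data/{path}")


def revoke_token(token: str) -> urllib.request.Request:
    # Revoking a token (and its child tokens) withdraws the access it granted
    return _vault_request("POST", "auth/token/revoke", {"token": token})
```

With a reachable server, `json.load(urllib.request.urlopen(read_secret("myapp/db")))["data"]["data"]` would return the stored key-value pairs for the hypothetical `myapp/db` path.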

Kubernetes Secrets, by contrast, are only base64-encoded and, unless encryption at rest is configured for etcd, are stored unencrypted. Vault keeps secrets encrypted: it encrypts everything it writes to its storage backend with AES-256 in GCM mode and uses TLS to protect data in transit. Through its Transit secrets engine, Vault also acts as an “encryption as a service” provider, so applications can send data to Vault for encryption and decryption without ever handling the keys. Together, these layers protect sensitive data from unauthorized access both while it travels across networks and while it resides in cloud and data center storage.
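The contrast above can be sketched in a few lines of Python. The first helper decodes a Kubernetes Secret value, showing that base64 is an encoding rather than encryption; the others build requests for Vault's Transit engine, which accepts base64-encoded plaintext and returns a `vault:v1:`-prefixed ciphertext while the key stays inside Vault. The address, token, and the `app-key` key name are hypothetical, and the requests are built but not sent.

```python
import base64
import json
import urllib.request

# Hypothetical values for illustration only.
VAULT_ADDR = "http://127.0.0.1:8200"
VAULT_TOKEN = "hvs.example-token"
KEY_NAME = "app-key"  # hypothetical Transit key, assumed to be created beforehand


def decode_k8s_secret_value(value: str) -> str:
    # Kubernetes Secret values are base64-encoded, not encrypted:
    # anyone who can read the object can recover the plaintext.
    return base64.b64decode(value).decode()


def _post(path: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON POST to Vault."""
    req = urllib.request.Request(
        f"{VAULT_ADDR}/v1/{path}", data=json.dumps(body).encode(), method="POST"
    )
    req.add_header("X-Vault-Token", VAULT_TOKEN)
    req.add_header("Content-Type", "application/json")
    return req


def encrypt_request(plaintext: bytes) -> urllib.request.Request:
    # Transit expects base64-encoded plaintext; the encryption key never leaves Vault
    body = {"plaintext": base64.b64encode(plaintext).decode("ascii")}
    return _post(f"transit/encrypt/{KEY_NAME}", body)


def decrypt_request(ciphertext: str) -> urllib.request.Request:
    # Ciphertext looks like "vault:v1:..." where v1 is the key version used
    return _post(f"transit/decrypt/{KEY_NAME}", {"ciphertext": ciphertext})


def plaintext_from_response(response_body: bytes) -> bytes:
    # /transit/decrypt returns the plaintext base64-encoded under data.plaintext
    return base64.b64decode(json.loads(response_body)["data"]["plaintext"])
```

For example, `decode_k8s_secret_value("czNjcjN0")` returns `"s3cr3t"` with no key at all, whereas recovering data from a Transit ciphertext requires a request that Vault authorizes.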

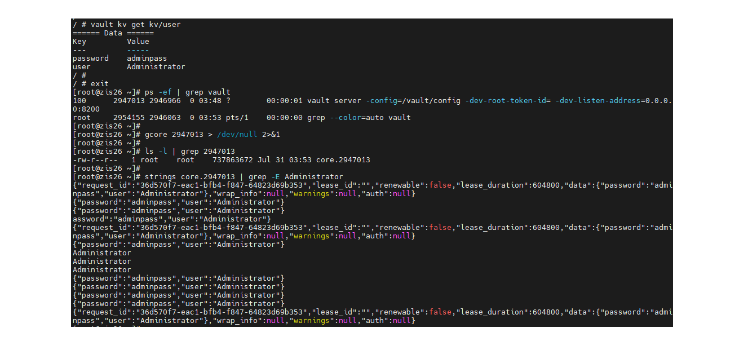

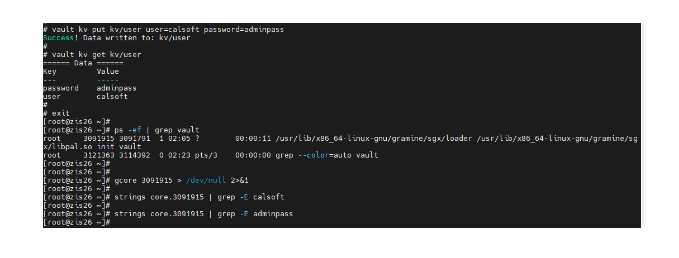

This raises a pertinent question: “Why is confidential computing necessary if Vault already secures data in transit and at rest?” Confidential computing protects data during computation, while it is “in use.” This matters especially when applications run in a shared public cloud or edge environment alongside applications from other vendors, some of whom may have privileged access. Even though Vault stores secrets in encrypted form and grants access only to authorized tokens, someone with elevated system privileges could still recover them from a memory dump, because a running Vault process holds decrypted secrets and its encryption keys in memory.

For instance, suppose Vault runs in a Docker container and an application reads secrets stored as key-value pairs in Vault. A person with privileged access who obtains a memory dump of the Vault process could extract those secrets with simple Linux commands.
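To show why plaintext in process memory is a problem, here is a minimal Python sketch of what a tool like `strings` does to a raw memory dump: it pulls out runs of printable bytes, which can then be filtered for secret-looking keywords. The dump is a synthetic byte string, and the keyword list and 4-byte minimum are illustrative assumptions. Run against memory protected by an SGX enclave, the same scan would not recover the plaintext secrets.

```python
import re


def printable_strings(dump: bytes, min_len: int = 4) -> list:
    """Extract runs of printable ASCII, roughly what the `strings` utility does."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [m.group().decode("ascii") for m in pattern.finditer(dump)]


def find_candidates(dump: bytes, keywords=("password", "token", "secret")) -> list:
    """Keep only strings that mention a secret-looking keyword (illustrative list)."""
    return [s for s in printable_strings(dump) if any(k in s.lower() for k in keywords)]


# A synthetic "dump": plaintext secrets surrounded by non-printable bytes.
SAMPLE_DUMP = (
    b"\x00\x01" + b'{"password":"s3cr3t"}' + b"\x00\xff" + b"ok\x00" + b"api_token=abc123\x00"
)
```

Here `find_candidates(SAMPLE_DUMP)` returns `['{"password":"s3cr3t"}', 'api_token=abc123']`; the short `ok` run is skipped because it is below the minimum length.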

Gramine with Vault for confidential computing using Intel SGX Enclaves

What is Gramine?

Gramine is a lightweight library OS that runs unmodified Linux applications inside Intel SGX (Software Guard Extensions) enclaves, sparing developers the cumbersome work of porting each application to the SGX environment. The enclave shields the application from a potentially malicious host, including the operating system, the hypervisor, and privileged users.

Gramine also supports local and remote attestation, which lets a verifier confirm the authenticity and integrity of the SGX enclave in which the application is running before trusting it with secrets.
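What a verifier checks during remote attestation can be sketched as follows. The sketch assumes the quote's signature has already been verified (for example with Intel's DCAP quote-verification library) and its fields parsed into the hypothetical `EnclaveReport` type; only the policy comparison is shown. MRENCLAVE identifies the exact enclave build, MRSIGNER identifies the signing key, and the DEBUG attribute must be clear because debug enclaves can be inspected by the host.

```python
from dataclasses import dataclass

SGX_FLAGS_DEBUG = 0x2  # bit 1 of the SGX ATTRIBUTES.FLAGS field


@dataclass
class EnclaveReport:
    """Hypothetical container for fields taken from an already-verified SGX quote."""
    mr_enclave: str        # hex hash of the enclave's initial code and data
    mr_signer: str         # hex hash of the public key that signed the enclave
    isv_prod_id: int       # product ID assigned by the enclave signer
    isv_svn: int           # security version number of the enclave
    attributes_flags: int  # SGX attribute flags, including DEBUG


def check_report(report: EnclaveReport, expected_mr_enclave: str,
                 expected_mr_signer: str, expected_prod_id: int,
                 min_svn: int = 0) -> list:
    """Return policy violations; an empty list means the enclave is accepted."""
    problems = []
    if report.mr_enclave.lower() != expected_mr_enclave.lower():
        problems.append("MRENCLAVE mismatch: unexpected enclave code")
    if report.mr_signer.lower() != expected_mr_signer.lower():
        problems.append("MRSIGNER mismatch: unexpected signing key")
    if report.isv_prod_id != expected_prod_id:
        problems.append("ISVPRODID mismatch: unexpected product")
    if report.isv_svn < min_svn:
        problems.append("ISVSVN below minimum: outdated enclave")
    if report.attributes_flags & SGX_FLAGS_DEBUG:
        problems.append("debug enclave: its memory can be inspected")
    return problems
```

Only when the list is empty would a relying party release secrets to the enclave; the expected values come from a trusted build of the enclave, not from the report itself.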

Running Vault in SGX Enclave using Gramine

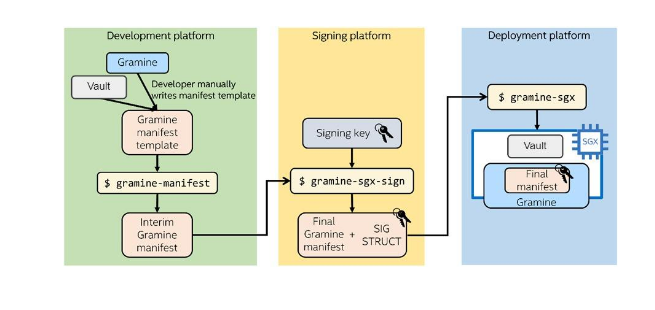

Gramine relies on a manifest file, a per-application configuration that describes how to launch the application inside an SGX enclave. Gramine parses the manifest to learn about the application itself, its SGX enclave attributes (such as enclave size and thread count), and its external dependencies, such as the libraries and files it needs.

Once the manifest is written, the user runs Gramine's tools to generate the SGX- and Gramine-specific files: they produce the final manifest, measure the enclave contents, and sign the enclave. This measurement is what attestation later verifies. Together, these steps prepare an environment in which the application can run securely inside the SGX enclave. The diagram below shows the sequence of steps.

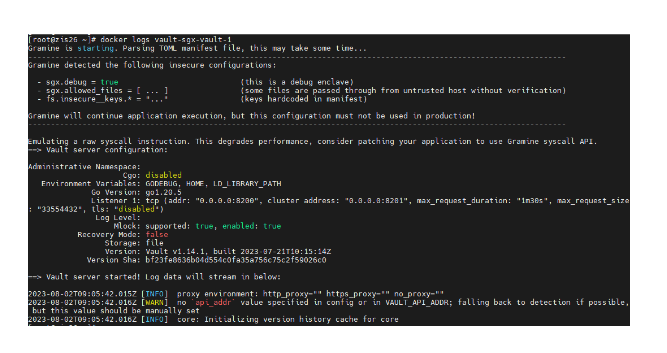
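The sequence in the diagram above can be sketched as a small Python driver around Gramine's command-line tools. The tool names and flags match recent Gramine releases (some older releases also used a `gramine-sgx-get-token` step); the `vault` file names and the `log_level` template variable are assumptions, and the driver defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess

APP = "vault"  # hypothetical base name of the manifest template and executable


def gramine_sgx_steps(app: str = APP) -> list:
    """Command lines for preparing and launching an application with Gramine-SGX."""
    return [
        # One-time: create the enclave signing key in Gramine's default location
        ["gramine-sgx-gen-private-key"],
        # Render the manifest template; -D defines a variable the template may use
        ["gramine-manifest", "-Dlog_level=error", f"{app}.manifest.template", f"{app}.manifest"],
        # Measure and sign the enclave, producing <app>.manifest.sgx and <app>.sig
        ["gramine-sgx-sign", "--manifest", f"{app}.manifest", "--output", f"{app}.manifest.sgx"],
        # Launch inside the enclave; Vault's own arguments are assumed to be set in the manifest
        ["gramine-sgx", app],
    ]


def run_steps(dry_run: bool = True) -> list:
    """Print (dry run) or execute each step, stopping at the first failure."""
    executed = []
    for cmd in gramine_sgx_steps():
        line = shlex.join(cmd)
        if dry_run:
            print(line)
        else:
            subprocess.run(cmd, check=True)
        executed.append(line)
    return executed
```

Keeping the steps as argument lists (rather than shell strings) avoids quoting issues, and `check=True` makes a failed signing step abort the launch.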

With Vault running inside an SGX enclave, even users with privileged access cannot read its secrets from a memory dump: enclave memory is encrypted by the CPU and cannot be accessed by software outside the enclave, including the operating system.

This setup works for both standalone applications and applications running in Kubernetes Pods, giving them a secure way to access credentials and secrets. In Kubernetes, Vault can run inside a Pod in the cluster, or it can run outside the cluster and be exposed to workloads through a dedicated service. This flexibility lets the same confidentiality guarantees apply across deployment scenarios.
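When Vault serves workloads in a Kubernetes cluster, one common pattern is Vault's Kubernetes auth method: the pod presents its service-account JWT and receives a Vault token. The sketch below assumes the auth method is enabled at the default `kubernetes` path; the Service address and the `myapp` role are hypothetical, and the token path is the standard location where Kubernetes mounts service-account tokens into pods.

```python
import json
import urllib.request
from pathlib import Path

VAULT_ADDR = "https://vault.vault.svc:8200"  # hypothetical in-cluster Service address
ROLE = "myapp"  # hypothetical Vault role bound to the pod's service account
SA_TOKEN_PATH = Path("/var/run/secrets/kubernetes.io/serviceaccount/token")


def kubernetes_login_request(jwt: str, role: str = ROLE) -> urllib.request.Request:
    """Build a login request that exchanges the pod's JWT for a Vault token."""
    body = json.dumps({"role": role, "jwt": jwt}).encode()
    req = urllib.request.Request(
        f"{VAULT_ADDR}/v1/auth/kubernetes/login", data=body, method="POST"
    )
    req.add_header("Content-Type", "application/json")
    return req


def client_token_from_response(response_body: bytes) -> str:
    # Vault returns the new token under auth.client_token
    return json.loads(response_body)["auth"]["client_token"]


def login() -> str:
    """Only works inside a pod that can reach Vault and trusts its TLS certificate."""
    jwt = SA_TOKEN_PATH.read_text().strip()
    with urllib.request.urlopen(kubernetes_login_request(jwt)) as resp:
        return client_token_from_response(resp.read())
```

The returned token is then sent as `X-Vault-Token` on subsequent secret reads, so no long-lived Vault credential has to be baked into the container image.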

Conclusion

Cloud Native Architecture has revolutionized the landscape of application development, enabling us to harness the full potential of cloud technology. However, to ensure a seamless transition, we must establish a robust security foundation matching the protection level seen in conventional IT environments. Overlooking this aspect puts our critical business assets in jeopardy.

Today, applications are broken down into small, agile, widely distributed components, which greatly expands the attack surface. It is therefore essential to verify the identity of each component and protect the sensitive information it holds in order to prevent future security breaches.

Combined with confidential computing, Vault offers a highly secure solution that lets organizations protect their valuable data with hardware-based security. Running Vault in an Intel SGX enclave minimizes the attack surface, because the host operating system and privileged users no longer have to be trusted. Vault's Public Key Infrastructure (PKI) secrets engine extends these capabilities further: Vault can act as a Certificate Authority (CA) that issues certificates for secure TLS/mTLS communication between microservices running on the cloud-native platform. Together, these features make Vault a significant part of an organization's security framework.
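Issuing a certificate for a microservice from Vault's PKI engine can be sketched as below. It assumes the engine is mounted at `pki/` and that a role (here the hypothetical `microservice`) has been configured to allow the requested name; the address and token are also placeholders, and the request is built but not sent.

```python
import json
import urllib.request

# Hypothetical values for illustration only.
VAULT_ADDR = "http://127.0.0.1:8200"
VAULT_TOKEN = "hvs.example-token"
PKI_MOUNT = "pki"        # assumes the PKI engine is mounted at pki/
ROLE = "microservice"    # hypothetical role limiting allowed names and TTLs


def issue_cert_request(common_name: str, ttl: str = "24h") -> urllib.request.Request:
    """Build a request asking Vault's PKI engine to issue a certificate."""
    body = json.dumps({"common_name": common_name, "ttl": ttl}).encode()
    req = urllib.request.Request(
        f"{VAULT_ADDR}/v1/{PKI_MOUNT}/issue/{ROLE}", data=body, method="POST"
    )
    req.add_header("X-Vault-Token", VAULT_TOKEN)
    req.add_header("Content-Type", "application/json")
    return req


def tls_material(response_body: bytes) -> dict:
    """Pick out the PEM fields a service needs for mTLS from the issue response."""
    data = json.loads(response_body)["data"]
    return {
        "cert": data["certificate"],
        "key": data["private_key"],
        "ca": data["issuing_ca"],
    }
```

A service would write these PEM strings to files, load its own certificate and key with `ssl.SSLContext.load_cert_chain`, and trust the issuing CA via `load_verify_locations` to verify its peers.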
