Bhanu Pratap Singh


The Role of Generative AI in Test Automation: Enhancing Efficiency and Effectiveness

In today’s rapidly evolving digital landscape, delivering high-quality software solutions is crucial for businesses to remain competitive. Test automation has long been a cornerstone of software development, enabling organizations to streamline testing processes and accelerate time-to-market. However, as applications become more complex, traditional test automation approaches face challenges in keeping up with evolving testing needs. This is where Generative Artificial Intelligence (AI) comes into play, offering a transformative solution that can enhance the efficiency and effectiveness of test automation. In this blog post, we will explore the role of Generative AI in test automation and its potential to drive business success by improving software quality, reducing costs, and enhancing customer satisfaction.

Common Challenges Faced in Traditional Test Automation

Traditional test automation has done much to improve software quality and efficiency. However, despite its advantages, many teams struggle with the limitations and complexities of implementing and maintaining automation frameworks. Below are some of the most common challenges, which can slow down development, increase costs, and reduce the overall effectiveness of automated testing.

Slow to Write

Writing automated tests can be time-consuming, especially for complex applications. Accounting for varied scenarios and edge cases, and keeping tests stable, requires careful planning and meticulous coding, which can significantly slow down the development cycle.

Expensive

Implementing and maintaining a robust test automation suite can be costly. The expenses include not just the tools and infrastructure but also the time and expertise required to develop and manage the tests. This investment may seem steep, particularly when the return on investment isn’t immediately visible.

Brittle

Automated tests are often brittle, meaning they can easily break when there are minor changes in the application. This brittleness can lead to false positives or negatives in test results, requiring constant updates and maintenance, which adds to the overall burden of test automation.

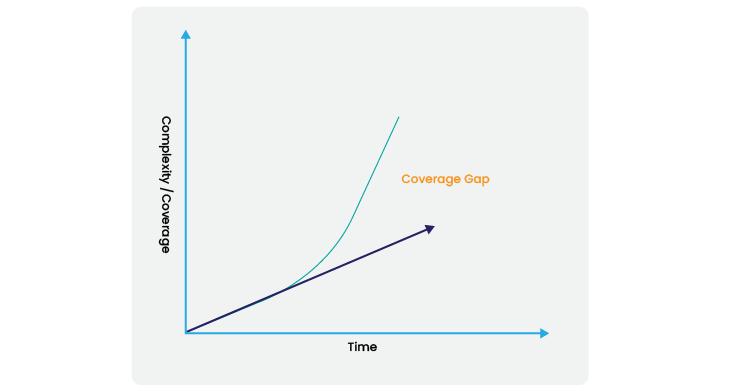

Feature vs. Coverage Gap in Test Automation

One of the challenges in test automation is the feature vs. coverage gap: new features are often added faster than automated tests are written for them, so the test suite may not cover all of the application's functionality. This gap leaves untested areas where bugs can slip through, making it crucial to balance testing new features with maintaining comprehensive coverage.

Understanding Generative AI

Generative AI uses machine learning models trained on large data sets to produce new content, such as text, code, or data, based on the patterns they have learned. Unlike traditional approaches that rely on predefined rules, generative models can produce novel outputs that were never explicitly programmed. This capability makes generative AI a powerful tool for businesses looking to optimize their test automation processes.

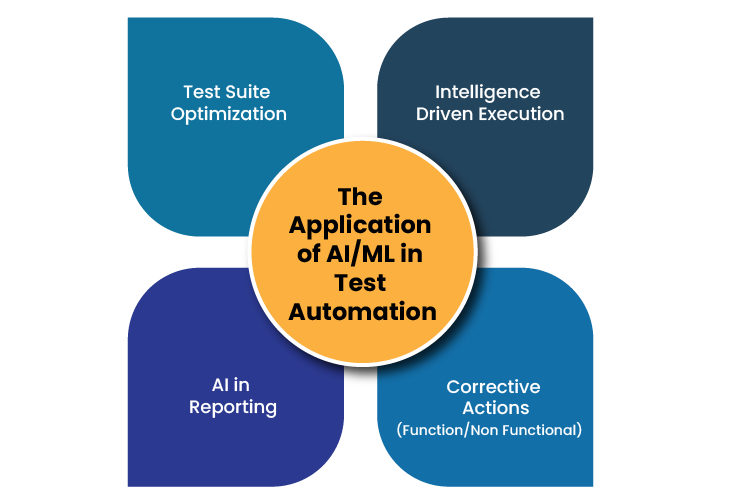

The Application of AI/ML in Test Automation

Before exploring how generative AI can enhance efficiency and effectiveness in test automation, let’s first briefly consider the areas where Artificial Intelligence and machine learning have already added significant value to test automation over the past decade.

Test Suite Optimization

- Identify impacted features and schedule tests on the fly

- Assess the impact of a defect on other test cases (with auto-skip enabled)

Intelligence-driven Execution

- Retest/rerun failed test cases (if needed)

- Identify potential security risks or performance bottlenecks, even when the intended tests are functional

- Identify failed objects using a prescribed technique

- Handle environment issues if they occur
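Rerunning failed tests is a common way to separate intermittent (flaky) failures from consistent ones. A minimal Python sketch, assuming tests are plain callables that raise an exception on failure; `run_with_retries` and `make_flaky_test` are hypothetical helpers, not part of any specific tool:

```python
from typing import Callable


def run_with_retries(test: Callable[[], None], max_retries: int = 2) -> str:
    """Run a test, rerunning it on failure.

    Returns "passed" if the first run passes, "flaky" if a rerun passes,
    and "failed" if every attempt fails.
    """
    for attempt in range(max_retries + 1):
        try:
            test()
        except Exception:  # assertion failures and environment errors alike
            continue  # this attempt failed; retry while attempts remain
        return "passed" if attempt == 0 else "flaky"
    return "failed"


def make_flaky_test(fail_times: int) -> Callable[[], None]:
    """Build a hypothetical test that fails on its first `fail_times` runs."""
    state = {"runs": 0}

    def test() -> None:
        state["runs"] += 1
        assert state["runs"] > fail_times, "simulated intermittent failure"

    return test
```

A test reported as "flaky" is a candidate for stabilization rather than a product defect, which is the kind of signal an intelligent execution engine can act on automatically.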

AI in Reporting

- Publish interactive dashboards

- Show defect trends

- Distinguish errors vs. failures

Corrective Actions

- Take an alternate route in case of failures

- Report to the respective stakeholders

Now let's explore the role generative AI can play in test automation.

Enhancing Efficiency in Test Automation

Automated Test Case Generation

Efficient test case generation is crucial for effective software testing. Traditional approaches often rely on manual analysis and rule-based techniques, which can be time-consuming and may not cover all possible scenarios. Generative AI models, on the other hand, can learn from existing test cases, system behavior, and specifications to automatically generate new test cases. By leveraging machine learning algorithms, these models can identify patterns, infer dependencies, and generate test cases that provide comprehensive coverage. This automated test case generation reduces the manual effort involved in test design and ensures broader test coverage, allowing businesses to detect potential issues early on and reduce the risk of software failures or downtime.

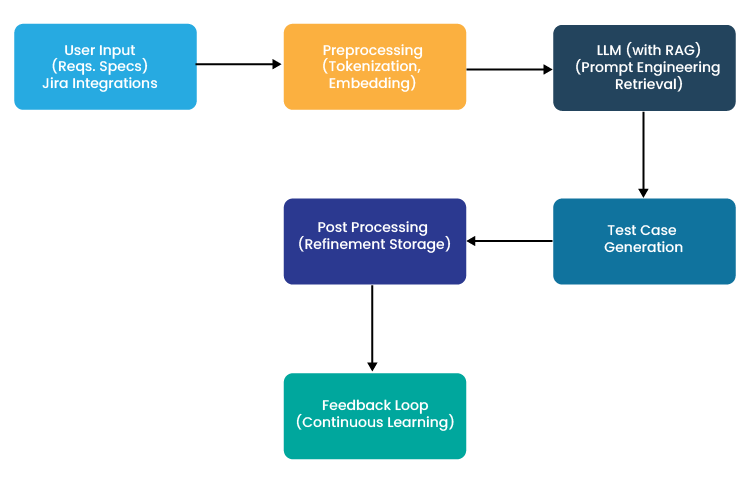

Below is a high-level architecture for a test case generation system that uses generative AI.

Architecture Overview

The architecture leverages Generative AI (using a Large Language Model, LLM) for generating test cases in a software development environment. The key elements include:

Input Interface:

- User Input: The interface where users provide input, such as software requirements, user stories, or system specifications, that need to be tested.

- Existing Test Data: Pre-existing test cases or related information that can be used for context.

Preprocessing:

- Natural Language Processing (NLP): The LLM is employed to process and understand the input data. This involves tokenization, embedding, and positional encoding to convert the text into a machine-understandable format.

- Embedding Techniques: Embedding transforms the input data into a vector space where relationships between terms can be captured. Contextual embedding could be used to capture both syntax and semantics.
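To make the preprocessing steps concrete, here is a toy Python sketch of tokenization and vector similarity. It is a deliberate simplification: a bag-of-words count vector stands in for a learned contextual embedding, and positional encoding is omitted entirely. The point is only that, once text is mapped into a vector space, related requirements score closer together than unrelated ones.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    """Lowercase word tokenization (a stand-in for an LLM's subword tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Dict[str, int]:
    """Sparse bag-of-words vector mapping token -> count.

    A real system would use a learned, contextual embedding model instead.
    """
    return dict(Counter(tokenize(text)))


def cosine(a: Dict[str, int], b: Dict[str, int]) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b.get(token, 0) for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0
```

For instance, "user login with valid password" scores about 0.67 against "login with invalid password" and 0.0 against "add item to cart".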

LLM with Customization Techniques:

- Prompt Engineering: Tailoring prompts to effectively guide the LLM to generate relevant test cases based on the input. This involves the creation of prompt templates and fine-tuning to ensure accurate and useful outputs.

- Retrieval-Augmented Generation (RAG): This technique retrieves relevant information from a knowledge base (e.g., past test cases or domain-specific documentation) and adds it to the prompt, so the LLM can generate more accurate, domain-specific test cases.

- Model Selection: Use an LLM such as LLaMA or GPT-4 based on the size and complexity of the test cases required.
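The prompt-engineering and RAG steps can be sketched in Python as follows. Everything here is an assumption for illustration: `KNOWLEDGE_BASE` is a hypothetical list of past test cases, word-overlap (Jaccard) scoring stands in for embedding-based vector search, and the code stops at building the prompt; sending it to LLaMA, GPT-4, or another model is left to whichever client library you use.

```python
import re
from typing import List, Set

# Hypothetical knowledge base of past test cases (in practice, a vector store).
KNOWLEDGE_BASE: List[str] = [
    "Login with valid credentials redirects to the dashboard",
    "Login with wrong password shows an error message",
    "Adding an item to the cart updates the cart badge count",
]

# Hypothetical prompt template with slots for retrieved context and the requirement.
PROMPT_TEMPLATE = """You are a QA engineer. Write test cases for the requirement below.
Return one test case per line as: title | steps | expected result.

Related existing test cases:
{context}

Requirement:
{requirement}
"""


def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 2) -> List[str]:
    """Return the k documents with the highest word-overlap (Jaccard) score."""
    q = _words(query)

    def score(doc: str) -> float:
        d = _words(doc)
        union = q | d
        return len(q & d) / len(union) if union else 0.0

    return sorted(KNOWLEDGE_BASE, key=score, reverse=True)[:k]


def build_prompt(requirement: str, k: int = 2) -> str:
    """Fill the template with the top-k retrieved test cases as context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(requirement, k))
    return PROMPT_TEMPLATE.format(context=context, requirement=requirement)
```

For example, `build_prompt("Remove an item from the cart", k=1)` pulls in the cart-badge test case as context, nudging the model toward the project's existing style and vocabulary.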

Test Case Generation:

- Output Generation: The LLM generates test cases based on the processed input and augmented data. This output includes various test cases with expected results for different scenarios.

- Quality Check: Applying validation to ensure the generated test cases align with best practices and cover the necessary scenarios effectively.
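For the quality check, one common pattern is to ask the model for structured output and reject anything malformed before it reaches the test repository. The Python sketch below assumes the prompt requested a JSON array of objects with `title`, `steps`, and `expected` fields; that schema is hypothetical, and real checks would add project-specific rules (naming conventions, coverage of negative paths, and so on).

```python
import json
from typing import Any, Dict, List, Tuple

# Assumed schema for each generated test case.
REQUIRED_FIELDS = ("title", "steps", "expected")


def validate_test_cases(raw_output: str) -> Tuple[List[Dict[str, Any]], List[str]]:
    """Parse model output and keep only well-formed, non-duplicate test cases.

    Returns (valid_cases, problems) so rejected cases can be logged or re-requested.
    """
    try:
        cases = json.loads(raw_output)
    except json.JSONDecodeError as exc:
        return [], [f"output is not valid JSON: {exc.msg}"]
    if not isinstance(cases, list):
        return [], ["expected a JSON array of test cases"]

    valid: List[Dict[str, Any]] = []
    problems: List[str] = []
    seen_titles = set()
    for i, case in enumerate(cases):
        if not isinstance(case, dict):
            problems.append(f"case {i}: not a JSON object")
            continue
        missing = [field for field in REQUIRED_FIELDS if not case.get(field)]
        if missing:
            problems.append(f"case {i}: missing {', '.join(missing)}")
            continue
        if not isinstance(case["title"], str) or not isinstance(case["steps"], list):
            problems.append(f"case {i}: title must be a string and steps a list")
            continue
        if case["title"] in seen_titles:
            problems.append(f"case {i}: duplicate title {case['title']!r}")
            continue
        seen_titles.add(case["title"])
        valid.append(case)
    return valid, problems
```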

Post Processing:

- Test Case Refinement: Post-generation, the test cases can be refined, optimized, or adjusted based on feedback or additional requirements.

- Test Case Storage: The generated and refined test cases are stored in a repository, making them available for future use and reference.
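A minimal sketch of the storage step, using Python's built-in `sqlite3` module. The schema and the fingerprinting rule (a SHA-256 hash of the requirement plus the canonical JSON of the test case) are assumptions; the idea is that regenerating tests for the same requirement should not pile up exact duplicates in the repository.

```python
import hashlib
import json
import sqlite3
from typing import Any, Dict, List


def open_repository(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the test-case repository; defaults to an in-memory DB."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS test_cases (
               fingerprint TEXT PRIMARY KEY,
               requirement TEXT NOT NULL,
               body        TEXT NOT NULL
           )"""
    )
    return conn


def fingerprint(requirement: str, case: Dict[str, Any]) -> str:
    """Stable hash of requirement + test case, so regenerated duplicates collide."""
    canonical = json.dumps({"requirement": requirement, "case": case}, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def store(conn: sqlite3.Connection, requirement: str, cases: List[Dict[str, Any]]) -> int:
    """Insert test cases, silently skipping exact duplicates; return how many were added."""
    before = conn.total_changes
    with conn:  # commits on success, rolls back on error
        for case in cases:
            conn.execute(
                "INSERT OR IGNORE INTO test_cases (fingerprint, requirement, body) VALUES (?, ?, ?)",
                (fingerprint(requirement, case), requirement, json.dumps(case, sort_keys=True)),
            )
    return conn.total_changes - before


def cases_for(conn: sqlite3.Connection, requirement: str) -> List[Dict[str, Any]]:
    """Return stored test cases for a requirement in insertion order."""
    rows = conn.execute(
        "SELECT body FROM test_cases WHERE requirement = ? ORDER BY rowid", (requirement,)
    )
    return [json.loads(body) for (body,) in rows]
```

Because the requirement is part of the fingerprint, the same test case can still be linked to two different requirements; only exact repeats for one requirement are ignored.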

Feedback Loop:

- Continuous Learning: As more test cases are generated and used, feedback on their effectiveness is collected and used to further fine-tune the LLM and prompts. This creates a continuous improvement loop.
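Fine-tuning the model itself is beyond a short example, but the prompt side of the feedback loop can be sketched. The hypothetical `TemplateFeedback` class below records whether test cases produced by each prompt template proved useful (what counts as "useful", such as a reviewer keeping the test or the test catching a real defect, is an assumption) and picks the template with the best smoothed success rate.

```python
from collections import defaultdict
from typing import Dict, List


class TemplateFeedback:
    """Track how useful each prompt template's generated test cases turned out to be."""

    def __init__(self, templates: List[str]) -> None:
        self.templates = list(templates)
        self.useful: Dict[str, int] = defaultdict(int)
        self.total: Dict[str, int] = defaultdict(int)

    def record(self, template: str, was_useful: bool) -> None:
        """Log one outcome for a generated test case."""
        self.total[template] += 1
        self.useful[template] += int(was_useful)

    def score(self, template: str) -> float:
        # Laplace smoothing: an untried template starts at 0.5 instead of 0 or 1.
        return (self.useful[template] + 1) / (self.total[template] + 2)

    def best(self) -> str:
        """Template with the highest smoothed success rate."""
        return max(self.templates, key=self.score)
```

Smoothing keeps a template from being declared best or worst after a single observation; a production system might also keep exploring lower-scoring templates occasionally.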

Automated Test Case Generation Architecture Diagram

Below is a simplified conceptual diagram illustrating the architecture:

Components Breakdown

User Input and Preprocessing:

- Utilizes NLP techniques to understand and embed the input data.

- Incorporates contextual embeddings to grasp the nuances and specific context of the requirements.

LLM (Large Language Model):

- Powered by state-of-the-art LLMs like LLaMA or GPT-4.

- Employs Prompt Engineering to guide the model for relevant test case generation.

- Uses RAG to integrate domain-specific knowledge for more accurate test generation.

Postprocessing and Feedback:

- The test cases generated by the LLM are further refined and optimized.

- A feedback loop allows continuous learning and improvement of the model based on real-world usage and outcomes.

This architecture is designed to leverage the strengths of generative AI, particularly in handling complex and context-sensitive tasks like test case generation, while ensuring that the system remains adaptable, accurate, and efficient in a real-world software development environment.


Rapid Adaptation to Changes:

Traditional test automation often struggles with brittleness, where even minor changes in the application can break multiple tests and require significant maintenance. Generative AI can adjust and regenerate test cases in response to changes in the application, which can substantially reduce the maintenance burden and help keep tests up to date with the latest code.
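What "adjusting" a test to an application change can mean is easiest to see in locator repair, often marketed as self-healing. The Python sketch below is a simple attribute-similarity heuristic, not a generative model: if the recorded locator no longer matches exactly, it falls back to the most similar element on the page. Elements are modeled as plain dictionaries, and the 0.5 threshold is an arbitrary assumption.

```python
from typing import Dict, List, Optional

Element = Dict[str, str]  # simplified DOM element: attribute name -> value


def similarity(a: Element, b: Element) -> float:
    """Fraction of attributes (over the union of keys) whose values are equal."""
    keys = set(a) | set(b)
    if not keys:
        return 0.0
    return sum(1 for k in keys if a.get(k) == b.get(k)) / len(keys)


def find_element(page: List[Element], locator: Element, min_score: float = 0.5) -> Optional[Element]:
    """Exact match first; otherwise fall back to the most similar element."""
    for el in page:
        if all(el.get(k) == v for k, v in locator.items()):
            return el
    best = max(page, key=lambda el: similarity(el, locator), default=None)
    if best is not None and similarity(best, locator) >= min_score:
        return best  # "healed": a real tool would log this and update the stored locator
    return None
```

If a button's `id` changes from `submit-btn` to `login-submit` but its tag and text stay the same, the fallback still finds it with a similarity of 2/3.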

Optimizing Test Coverage:

Generative AI can analyze which areas of the application are most critical and generate test cases that focus on those areas, ensuring optimal test coverage. This approach not only saves time but also ensures that critical features are thoroughly tested, reducing the risk of bugs slipping through.
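Deciding which areas are "most critical" needs some notion of risk. A minimal Python sketch, assuming three normalized inputs per application area (share of usage, recent change rate, and defect history) and illustrative, uncalibrated weights; the top-ranked areas would then be the ones a generative model is asked to write tests for.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Area:
    name: str
    usage: float        # share of user traffic, 0..1 (assumed input)
    change_rate: float  # recent commits touching this area, normalized 0..1
    defect_rate: float  # historical defects, normalized 0..1


def risk(area: Area, w_usage: float = 0.4, w_change: float = 0.3, w_defect: float = 0.3) -> float:
    """Weighted risk score; the default weights are illustrative, not calibrated."""
    return w_usage * area.usage + w_change * area.change_rate + w_defect * area.defect_rate


def prioritize(areas: List[Area], budget: int) -> List[str]:
    """Names of the `budget` riskiest areas to target with new tests."""
    return [a.name for a in sorted(areas, key=risk, reverse=True)[:budget]]
```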

Enhancing Effectiveness in Test Automation

Beyond efficiency, generative AI also plays a crucial role in enhancing the effectiveness of test automation:

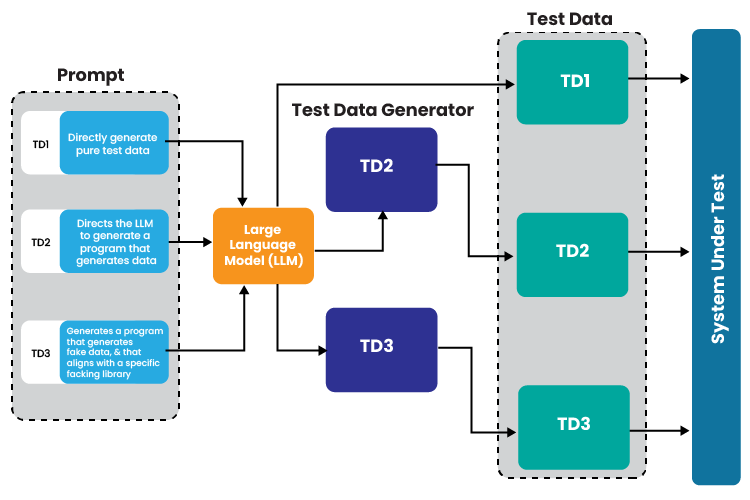

Intelligent Test Data Generation: One of the critical aspects of test automation is the availability of diverse and realistic test data. Generative AI significantly enhances test data generation by creating synthetic data that closely resembles real-world scenarios. By analyzing patterns in existing data sets, generative AI models can generate new data points that cover a wide range of test cases. This enables businesses to simulate various scenarios and uncover potential issues that may not have been identified with limited or static test data.

An example of the approach for generating fake test data with LLMs involves three key steps:

- Designing Prompts: The prompts define the testing domain, cultural constraints, and the programming language for the test generators. Three types of prompts are used:

- TD1: Directly generates pure test data (e.g., addresses in Pune).

- TD2: Generates a program that creates data (e.g., a Java program for generating addresses).

- TD3: Creates a program that generates data compatible with a specific faking library (e.g., an address generator for Faker.js).

- Executing the Data Generation Code: For TD2 and TD3 prompts, the generated code must be executed to produce the test data.

- Using the Generated Data: The generated test data is then used as input to test cases for the systems under test.

Prompts can be created in English or the local language of the system under test. Using the local language is recommended to ensure cultural relevance, but English can be used if the quality of the generated data is insufficient.
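As an illustration of what a TD2-style prompt might produce, here is a Python analogue of the address generator (the source's example uses Java). The locality list, address format, and function name are assumptions; in the approach described above, code like this would be generated by the LLM, executed (step 2), and its output fed into the tests (step 3).

```python
import random
from typing import Dict, List

# Illustrative subset of Pune localities and their PIN codes.
LOCALITIES: Dict[str, str] = {
    "Kothrud": "411038",
    "Baner": "411045",
    "Aundh": "411007",
    "Hadapsar": "411028",
    "Hinjewadi": "411057",
}


def generate_addresses(count: int, seed: int = 42) -> List[Dict[str, str]]:
    """Generate `count` synthetic Pune addresses; a fixed seed keeps runs reproducible."""
    rng = random.Random(seed)
    addresses = []
    for _ in range(count):
        locality, pin = rng.choice(list(LOCALITIES.items()))
        addresses.append({
            "line1": f"Flat {rng.randint(1, 20)}{rng.choice('ABCD')}, Lane {rng.randint(1, 12)}",
            "locality": locality,
            "city": "Pune",
            "state": "Maharashtra",
            "pin": pin,
        })
    return addresses
```

The fixed seed makes the test data reproducible across runs, which helps when a failing test needs to be replayed with exactly the same inputs.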

Automated Bug Generation and Detection: Bugs and software vulnerabilities can have severe consequences for businesses, leading to costly rework, negative customer experiences, and reputational damage. Generative AI can play a crucial role in automated bug generation and detection, enabling businesses to proactively identify and resolve potential issues before they impact end-users. Generative AI models can simulate various inputs and scenarios to uncover potential bugs by exploring different paths and combinations. By doing so, they can identify edge cases, boundary conditions, and unusual scenarios that human testers might overlook.
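Exploring inputs to uncover edge cases can be illustrated with random fuzzing plus boundary-value analysis, a classic non-generative technique; a generative model could help by proposing the input ranges or the invariants to check. In the Python sketch below, `apply_discount` is a hypothetical function under test with a planted bug (it does not cap `percent` at 100), and the invariant `0 <= result <= price` is an assumption about its intended behavior.

```python
import random
from typing import Callable, List, Tuple


def apply_discount(price: int, percent: int) -> int:
    """Hypothetical function under test. Planted bug: percent is not capped at 100."""
    if percent < 0:
        raise ValueError("percent must be non-negative")
    return price - price * percent // 100


def boundary_values(lo: int, hi: int) -> List[int]:
    """Classic boundary-value picks around an allowed range [lo, hi]."""
    return [lo - 1, lo, lo + 1, hi - 1, hi, hi + 1]


def fuzz(fn: Callable[[int, int], int], trials: int = 200, seed: int = 0) -> List[Tuple[int, int]]:
    """Return inputs whose result violates the invariant 0 <= result <= price."""
    rng = random.Random(seed)
    inputs = [(100, p) for p in boundary_values(0, 100)]
    inputs += [(rng.randint(0, 10_000), rng.randint(-50, 150)) for _ in range(trials)]
    bugs = []
    for price, percent in inputs:
        try:
            result = fn(price, percent)
        except ValueError:
            continue  # documented rejection of invalid input, not a bug
        if not 0 <= result <= price:
            bugs.append((price, percent))
    return bugs
```

The boundary value 101 exposes the bug immediately: `apply_discount(100, 101)` returns `-1`.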

Popular Tools that Support Generative AI for Test Automation

| Tool | Key Features | Strengths | Platforms Supported |
| --- | --- | --- | --- |
| Functionize | Self-healing, end-to-end, cloud-scalable tests, low-code, integrates with CI/CD. | Reduces QA overhead, faster testing at scale. | Web, cloud, APIs |
| Testsigma | AI-powered test automation, supports web, mobile, and desktop apps and APIs, integrates with CI/CD pipelines. | Accessible plain-English automation, covers multiple platforms, seamless test management. | Web, mobile, desktop, APIs |
| Katalon Studio | AI visual testing, runs functional and visual tests in parallel, reduces false positives. | Integrated UI testing, smart comparisons for design changes. | Web, mobile, desktop, APIs |
| Applitools | Visual AI-powered test automation, reduces manual work, large-scale testing. | Reduces time for test creation and execution, high-scale testing across applications. | Web, mobile |
| Digital.ai Continuous Testing | Scalable AI-driven functional and performance testing, cloud-based, user-friendly Test Editor. | Comprehensive cloud-based solution, fast testing cycles, easy test creation. | Cloud, web, mobile |
| TestCraft | Codeless Selenium-based testing, AI/ML technology, cloud-based collaboration. | Suitable for non-technical users, reduces maintenance effort, supports remote work. | Web |
| Testim | AI-powered fast test authoring, stabilizes flaky tests, efficient scaling. | High-speed test creation, robust control over testing operations. | Web, mobile |
| mabl | Low-code AI test automation, integrates load testing, shifts performance testing earlier. | Reduces infrastructure costs, high-velocity team collaboration. | Web, mobile, APIs, performance testing |
| Sauce Labs | Low-code web testing, AI-powered, empowers non-programmers, fosters collaboration. | Empowers citizen testers, easy automation of web tests. | Web, mobile, cloud |
| Tricentis | AI- and cloud-powered test automation, risk-based, codeless approach, boosts release confidence. | Comprehensive test suite, improves visibility, shortens test cycles. | Web, mobile, desktop |
| ACCELQ | AI-powered codeless cloud automation, multi-channel (web, mobile, API), business-process-focused. | Focuses on real-world complexities, business process automation. | Web, mobile, APIs |
| TestRigor | User-centric end-to-end test automation, identifies screen elements, stable tests for mobile and desktop browsers. | Highly stable tests, allows complex test creation without coding. | Web, mobile, desktop, APIs |

Challenges and Considerations of Generative AI in Test Automation

While generative AI brings significant benefits to test automation, businesses need to be aware of certain challenges and considerations.

Data Quality and Reliability:

- Generative AI’s performance depends on the quality and diversity of its training data.

- Ensuring representative and comprehensive data sets is crucial for accurate outputs.

Collaboration:

- Successful integration of generative AI in test automation requires collaboration between AI experts, testers, and developers.

Transparency and Interpretability:

- AI model transparency is essential, especially in regulated industries or when handling sensitive data.

- Businesses must understand and trust AI-generated decisions to ensure reliability and compliance.

Future of Generative AI in Test Automation

As generative AI continues to evolve, its role in test automation will expand, introducing innovative capabilities that go beyond current practices. Here are some key trends that will shape the future of test automation:

AI-Powered Autonomous Testing:

- Generative AI will move toward fully autonomous testing, where tests are created, executed, and maintained without human intervention. The AI will not only generate tests based on code changes but also determine when to run them, analyze the results, and refine future test cases based on outcomes.

Context-Aware Testing:

- Future AI models will incorporate more contextual awareness, understanding business logic and user intent. This will enable more intelligent test creation that aligns with real-world scenarios, ensuring that testing is relevant and mirrors actual user behavior.

Predictive Failure Detection:

- Beyond just detecting bugs, generative AI will predict where potential failures might occur based on historical data and code analysis. This proactive approach will help development teams address issues before they manifest in the live environment, minimizing downtime and improving software reliability.

Continuous Learning and Adaptation:

- As AI models become more sophisticated, they will continuously learn from testing outcomes. Over time, this adaptive learning will enable the system to generate more accurate test cases and optimize testing strategies based on evolving application behavior.

Human-AI Collaboration:

- Rather than replacing manual testers, generative AI will augment human efforts by handling repetitive tasks, while humans focus on more complex, creative, and strategic testing activities. This will create a hybrid testing model where AI and human testers collaborate to achieve maximum efficiency and effectiveness.

Enhanced Security and Compliance Testing:

- Generative AI will increasingly focus on automating security and compliance tests, identifying vulnerabilities and ensuring regulatory requirements are met. This will be particularly important for industries with stringent security and privacy standards.

Multi-Platform and Cross-Environment Testing:

- AI will streamline the complexity of testing across multiple platforms, devices, and environments. By understanding the nuances of different platforms (e.g., mobile, cloud, IoT), AI will generate tailored test cases that ensure comprehensive coverage across all environments.

Conclusion

Generative AI offers a powerful solution for businesses aiming to enhance their test automation processes and drive business success. By leveraging generative AI in test data generation, test case generation, and automated bug detection, businesses can optimize their testing efforts, improve software quality, and reduce costs. This, in turn, leads to higher customer satisfaction, faster time-to-market, and a competitive advantage in the market. Embracing generative AI in test automation is a strategic move for businesses seeking to deliver high-quality software solutions while maximizing efficiency and achieving business objectives.

For the latest updates or additional information, feel free to contact ACL Digital.
