Jayshree Rathod


Beyond the Black Box: The Role of Explainable AI (XAI) in Enabling Ethical and Responsible AI Adoption

We live in a transformative era where Artificial Intelligence (AI) and Machine Learning (ML) fundamentally reshape industries and push the boundaries of what’s possible. These technologies enhance efficiency and provide invaluable predictions that inform strategic decisions, unlock future opportunities, and mitigate potential risks across various sectors. From predicting market trends to diagnosing diseases, AI is becoming an indispensable tool.

However, as we embrace AI’s capabilities, the true power of these systems lies not only in their ability to make predictions but also in their explainability. In other words, knowing what AI predicts is not enough. In critical, high-stakes domains like healthcare, finance, defense, and autonomous systems, accuracy is non-negotiable. Unquestioningly trusting AI’s predictions without understanding their rationale can be perilous, especially when these decisions profoundly impact lives and resources.

This is where Explainable AI (XAI) comes into play. XAI ensures transparency, providing insights into the decision-making processes of AI models. By making the reasoning behind AI’s predictions more understandable, XAI helps uncover potential biases, validate decisions, and ensure that the outcomes align with ethical and regulatory standards. For instance, understanding why an AI model suggests a particular treatment in healthcare can be as important as the prediction itself. In finance, knowing how an algorithm assesses risk can help regulators and stakeholders ensure compliance with fair lending practices.

Furthermore, XAI allows for continuous model improvement. By understanding the “why” behind AI’s decisions, data scientists and engineers can refine algorithms, correct errors, and optimize their performance. This level of transparency fosters trust, especially when AI systems are being integrated into environments where human safety, financial security, and national defense are at stake.

Ultimately, the ability to explain AI’s predictions and decisions is not just a matter of technical accuracy; it’s about building a system of accountability. It ensures that AI models are not operating as “black boxes” but are instead open, auditable, and accountable systems. This is crucial for AI’s ethical adoption and ensuring its responsible use in applications where precision and trust are paramount. Through explainability, we can more confidently deploy AI technologies in these high-stakes sectors, ensuring that the technology aligns with societal values and can be trusted to make decisions that impact lives, economies, and communities.

The image below compares traditional AI/ML models with Explainable AI (XAI):

- Traditional AI/ML

The model learns from training data and produces a function that provides decisions or recommendations, but without transparency.

- XAI

In addition to the learning process, it includes an explainable model and an explanation interface, ensuring users understand how and why decisions are made and improving trust and accountability.

XAI effectively addresses the questions that AI engineers encounter while training models using traditional methods. It not only provides answers but also bridges the gap between two ways of thinking—the AI’s reasoning and the AI engineer’s understanding.

Traditional ML Vs XAI

XAI techniques

Many XAI techniques are available; the ones listed below are among the earliest. To understand how XAI works, let’s examine one of them, LIME, in detail.

- LIME (Local Interpretable Model-agnostic Explanations)

- RETAIN (REverse Time AttentIoN) Model

- LRP (Layer-wise Relevance Propagation)

How LIME Works (Step-by-Step)

- Perturbing Inputs: LIME slightly modifies the input data (e.g., changing feature values) to create variations around the instance being explained.

- Generating Predictions: The original AI/ML model makes predictions on these modified inputs.

- Fitting a Simple Model: LIME trains an interpretable model (e.g., linear regression) on the perturbed inputs and their predictions, weighting samples by their proximity to the original input, to approximate the local decision boundary.

- Identifying Important Features: The simple model’s coefficients serve as importance scores, showing which factors influenced the prediction and in which direction.

- Visualizing Explanations: The results are presented in an understandable form, helping users interpret AI decisions.
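The steps above can be sketched in a few lines of standard-library Python. This is a minimal illustration of the idea, not the LIME library itself: the `black_box` model, its three feature names, the Gaussian perturbation scale, and the kernel width are all assumptions chosen for the example.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque model: approval probability from three scaled
    features [income, existing_debt, loan_amount]."""
    z = 3.0 * x[0] - 2.0 * x[1] - 4.0 * x[2]
    return 1.0 / (1.0 + math.exp(-z))


def solve(a, b):
    """Solve the linear system a @ w = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_explain(predict, x, n_samples=1000, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around x; return its slopes."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the weighted normal equations (X^T W X) w = X^T W y.
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]       # step 1: perturb
        y = predict(z)                                      # step 2: predict
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)       # closer = heavier
        row = [1.0] + [zi - xi for zi, xi in zip(z, x)]     # intercept + offsets
        for i in range(d + 1):
            xty[i] += weight * row[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * row[i] * row[j]
    coef = solve(xtx, xty)                                  # step 3: fit
    return coef[1:]                                         # step 4: importances


names = ["income", "existing_debt", "loan_amount"]
weights = dict(zip(names, lime_explain(black_box, [0.5, 0.5, 0.5])))

# Step 5: present the explanation, strongest influence first.
for name, w in sorted(weights.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>14}: {w:+.3f}")
```

A positive weight means the feature pushes this particular prediction toward approval, and a negative weight pushes it away. The weights describe the model only near the chosen input, which is what "local" means in LIME.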

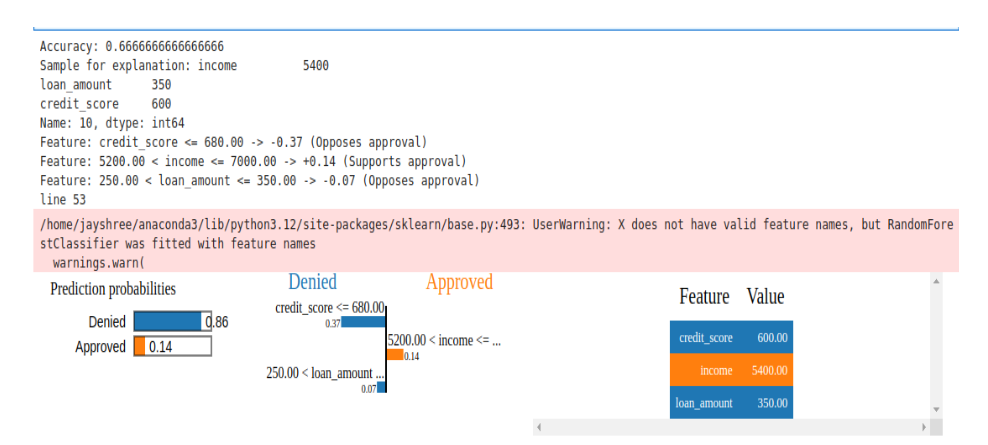

Experimental Results of the LIME XAI Technique

From the experimental results above, we can see that:

- Credit Score & Loan Amount negatively impact approval.

- Income slightly supports approval.

RETAIN, developed by the Georgia Institute of Technology, is an interpretable deep-learning model designed to predict heart failure. Using an attention-based mechanism, it highlights key clinical data influencing predictions, enhancing trust in AI-driven healthcare.
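As a rough sketch of how attention weights can be turned into per-feature contributions in a RETAIN-style model, the example below assumes the visit-level attention `alphas` and variable-level attention `betas` have already been produced (the recurrent networks that generate them are omitted). All names, shapes, and numbers are hypothetical.

```python
def retain_contributions(visits, w_emb, alphas, betas, w_out):
    """Contribution of feature k at visit i to the output logit.

    visits: T visits, each a list of r feature values
    w_emb:  m x r embedding matrix (list of m rows)
    alphas: T visit-level attention weights (scalars)
    betas:  T variable-level attention vectors (length m)
    w_out:  m output-layer weights for a single logit
    """
    contributions = []
    for x, alpha, beta in zip(visits, alphas, betas):
        row = []
        for k, x_k in enumerate(x):
            # w_out . (beta * W_emb[:, k]): how strongly feature k feeds the logit
            coeff = sum(w_out[d] * beta[d] * w_emb[d][k] for d in range(len(w_out)))
            row.append(alpha * coeff * x_k)
        contributions.append(row)
    return contributions


# Two visits, two clinical features, embedding size two (made-up values).
visits = [[1.0, 0.0], [1.0, 1.0]]
w_emb = [[0.5, 1.0], [-1.0, 2.0]]
alphas = [0.2, 0.8]
betas = [[1.0, 0.5], [0.5, 1.0]]
w_out = [1.0, 1.0]

contrib = retain_contributions(visits, w_emb, alphas, betas, w_out)

# Cross-check: the contributions add up to the logit computed the direct way.
context = [0.0, 0.0]
for x, alpha, beta in zip(visits, alphas, betas):
    v = [sum(w_emb[d][k] * x[k] for k in range(len(x))) for d in range(2)]
    context = [c + alpha * beta[d] * v[d] for d, c in enumerate(context)]
logit = sum(w * c for w, c in zip(w_out, context))

for i, row in enumerate(contrib):
    print(f"visit {i}: {row}")
```

Because the model's output is linear in the attention-weighted embeddings, each clinical feature at each visit gets a signed score, and those scores sum to the logit (before any bias term). That additivity is what lets a clinician see which visits and which codes drove a prediction.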

Layer-wise Relevance Propagation (LRP) improves AI transparency by working backward through neural networks to identify the most relevant input features, making predictions more interpretable.
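The backward pass can be illustrated on a tiny fully connected network. The sketch below uses the epsilon rule, one of several LRP propagation rules; the network weights and input are hypothetical, and biases are set to zero so that relevance is conserved from layer to layer.

```python
def dense(x, w, b):
    """Forward pass of a dense layer: out_j = sum_i x_i * w[i][j] + b_j."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def relu(values):
    return [max(0.0, v) for v in values]


def lrp_dense(x, w, b, relevance_out, eps=1e-6):
    """Redistribute relevance from a dense layer's outputs to its inputs
    using the LRP-epsilon rule."""
    z = dense(x, w, b)
    # Relevance per unit of pre-activation, stabilised away from zero.
    s = [r / (zj + (eps if zj >= 0 else -eps)) for r, zj in zip(relevance_out, z)]
    # Each input receives relevance in proportion to x_i * w_ij.
    return [x[i] * sum(w[i][j] * s[j] for j in range(len(s))) for i in range(len(x))]


# Hypothetical network: 3 inputs -> 2 hidden units (ReLU) -> 1 output.
w1 = [[1.0, -1.0], [0.5, 2.0], [-1.0, 0.5]]
b1 = [0.0, 0.0]
w2 = [[2.0], [1.0]]
b2 = [0.0]

x = [1.0, 2.0, 0.5]
hidden = relu(dense(x, w1, b1))
output = dense(hidden, w2, b2)

# Start with the output score as total relevance and walk backward.
hidden_relevance = lrp_dense(hidden, w2, b2, output)
input_relevance = lrp_dense(x, w1, b1, hidden_relevance)

print("output score:   ", output[0])
print("input relevance:", input_relevance)
```

The relevance scores sum (up to the small stabiliser) to the output score, so they can be read as a breakdown of the prediction across the input features. Here the second input carries most of it and the third counts against it.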

The LIME experiment above shows how the method reveals the features that most strongly push the result in a positive or negative direction.

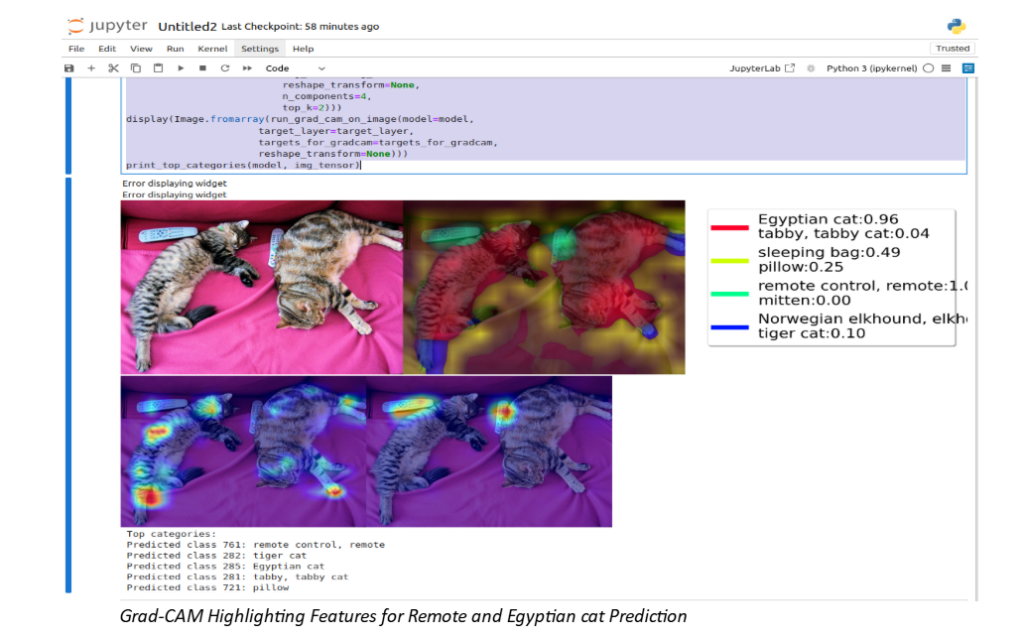

Let’s look at one more example that will help us understand how the XAI technique is helpful in computer vision applications.

Gradient-weighted Class Activation Mapping (Grad-CAM) uses the gradients of a target class score, flowing into the final convolutional layer of the network, to produce a heatmap that localizes and highlights the image regions most relevant to that class.

Grad-CAM holds significant potential in various computer vision tasks, including image classification, object detection, semantic segmentation, image captioning, and visual question answering. Moreover, it aids in interpreting deep learning models by providing insight into the rationale behind their predictions, answering the question, “Why did the model predict this outcome?”

This technique offers a clear visual explanation of the model’s behavior by generating the following for the target category:

- Class-discriminative localization

It highlights the specific regions in the image that contribute to the classification of the target category.

- High resolution

When combined with a fine-grained method such as guided backpropagation (Guided Grad-CAM), it captures fine-grained details, enhancing the precision of the localized features in the image.

Grad-CAM thus enables a deeper understanding of the inner workings of convolutional neural networks and provides more transparency in model predictions.
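The core Grad-CAM arithmetic is short enough to sketch without a deep learning framework. The example below assumes the feature maps of the last convolutional layer and the gradients of the target class score with respect to them have already been extracted from a network; the 2x2 values are made up for illustration.

```python
def grad_cam(activations, gradients):
    """Compute a normalised Grad-CAM heatmap.

    activations: K feature maps, each an H x W nested list
    gradients:   gradients of the class score w.r.t. those maps, same shape
    """
    k = len(activations)
    h = len(activations[0])
    w = len(activations[0][0])
    # Channel weights: global-average-pool each gradient map.
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # Weighted sum of feature maps, then ReLU to keep only positive evidence.
    cam = [
        [max(0.0, sum(alphas[c] * activations[c][i][j] for c in range(k)))
         for j in range(w)]
        for i in range(h)
    ]
    # Scale to [0, 1] so the map can be overlaid on the image.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Channel 0 fires top-left and supports the class (positive gradient);
# channel 1 fires bottom-right and argues against it (negative gradient).
activations = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],
    [[-1.0, -1.0], [-1.0, -1.0]],
]

heatmap = grad_cam(activations, gradients)
print(heatmap)  # [[1.0, 0.0], [0.0, 0.0]]
```

Only the top-left region lights up: the bottom-right activation is strong, but its negative gradient means it counts against the target class, so the ReLU removes it. In a real pipeline this coarse map would then be upsampled to the input image size before being overlaid.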

Providing explanations for individual predictions is essential. Highlighting specific features that led to a particular decision helps users understand why the model arrived at that outcome. In the picture below, the highlighted parts show the essential features that allow the model to predict the class.

Some Industry Use Cases of Grad-CAM

- Healthcare & Medical Imaging

Highlights regions in medical images for diagnosis (e.g., tumors, fractures). Assists pathologists in understanding focus areas in tissue samples.

- Autonomous Vehicles

Visualizes regions the model focuses on for object detection (e.g., road signs, pedestrians). Helps understand path planning and obstacle recognition decisions.

- Retail & E-commerce

Explains features driving product recommendations (e.g., colors, shapes). Improves visual search by showing why certain items are suggested.

- Security & Surveillance

Explains facial recognition by highlighting important facial features. Identifies suspicious activity in surveillance footage.

XAI: A Legal Mandate, Not Just a Need

Explainable AI (XAI) has evolved from a technological advancement into a critical legal necessity. It is essential for transparency in AI systems and plays a significant role in ensuring legal compliance, fostering accountability, and establishing trust in AI-driven decisions. As AI systems are increasingly deployed across various sectors, the need for explainability has become a legal mandate to ensure fairness and protect individuals’ rights.

Key Regulations Supporting XAI

- GDPR (Europe, 2018)

The General Data Protection Regulation (GDPR) addresses automated decision-making, including profiling, in Article 22, which is widely read (together with Recital 71) as the basis for a “right to explanation.” It allows people to challenge and question AI-driven decisions and to seek human intervention if they feel AI has unfairly impacted them, promoting accountability in AI systems.

- US Equal Credit Opportunity Act (1974)

In the US, the Equal Credit Opportunity Act requires creditors to tell applicants the principal reasons behind an adverse credit decision. With AI increasingly used in credit scoring, institutions like FICO are now incorporating explainability into their systems, giving customers transparency on how their creditworthiness is evaluated.

- France Digital Republic Act (2016)

This regulation mandates that algorithmic decisions affecting individuals, particularly those made by public administrations, be explainable. This ensures that AI systems used in public administration are transparent and fair, allowing citizens to understand and challenge decisions affecting them.

Future Scope of XAI

- Regulatory Compliance

As AI regulations tighten globally, organizations must adapt to evolving laws, ensuring their AI systems remain transparent and accountable to avoid legal repercussions.

- Healthcare & Finance

XAI will be critical in sectors like healthcare and finance, where AI models are used for risk assessment, diagnostics, and decision-making. Transparent models will increase trust and ensure reliable, unbiased outcomes.

- Human-AI Collaboration

As AI becomes more integrated into decision-making processes, explainability will enhance collaboration between humans and AI, allowing individuals to trust and confidently rely on AI-driven insights.

- Ethical AI Development

XAI will help promote fairness and reduce bias in AI systems, ensuring that these technologies are developed and used responsibly and align with ethical standards.

- Autonomous Systems

In applications like self-driving cars and defense systems, XAI will be crucial for enhancing safety by providing clear justifications for the AI’s actions, helping to build trust and accountability in autonomous technologies.

Conclusion

As AI continues to revolutionize industries, ensuring its ethical and responsible adoption is no longer optional; it is imperative. Explainable AI (XAI) bridges innovation and accountability, turning AI from a “black box” into a transparent, trustworthy partner in decision-making. Whether in healthcare, finance, defense, or autonomous systems, explainability fosters trust, enhances compliance, and ultimately drives better outcomes.

At ACL Digital, we go beyond building intelligent systems—we enable transparent, ethical, and industry-regulated AI. Integrating XAI into our solutions empowers businesses to make data-driven decisions confidently and clearly. The future of AI isn’t just about what it can do; it’s about understanding how and why it makes decisions. With XAI, we ensure that AI remains a force for progress, innovation, and responsible transformation.

The future is AI—but the future we trust is explainable AI.

References

https://www.fico.com/blogs/how-make-artificial-intelligence-explainable

Aki Ohasi, Director of Business Development at PARC: Learning XAI: Explainable Artificial Intelligence, released February 2019.

Xu, Feiyu & Uszkoreit, Hans & Du, Yangzhou & Fan, Wei & Zhao, Dongyan & Zhu, Jun. (2019). Explainable AI: A Brief Survey on History, Research Areas, Approaches and Challenges. doi:10.1007/978-3-030-32236-6_51.

Advance explainable AI for computer vision with pytorch-gradcam
